Job management

This instruction covers the basic commands for working with the cluster: submitting jobs to the queue, managing the queue, and retrieving results. It is assumed that the user has already connected to the login node (instruction is available here).

Interaction with the cluster takes place through a dedicated Linux login node that provides the Torque/Moab cluster client tools (developer documentation: http://docs.adaptivecomputing.com/torque/help.htm).

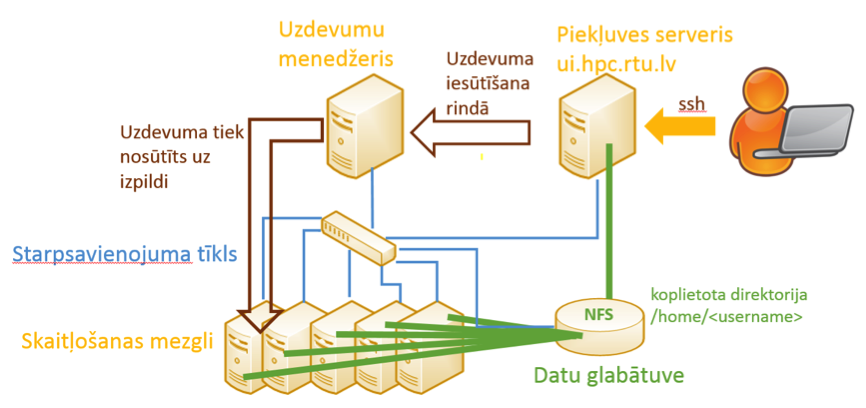

See the RTU HPC cluster architecture in the figure.

RTU HPC cluster architecture

- Each HPC user has their own workspace where job-related files can be stored. Upon connecting to the login node command line, the user starts in their working directory:

/home/

The file system is shared between the cluster nodes and the login node, so files do not need to be copied to or from the execution node or between nodes.

- Command for sending a simple job:

qsub test.sh

test.sh is a bash script (Linux command line) in which the user lists the commands to be executed sequentially on the computing node. The script allows batch execution without user interaction. It may contain the following line:

__________________

echo "Sveiciens no nodes `/bin/hostname`"

__________________

This command prints the name of the node on which the job runs (the Latvian text means "Greetings from node"). A demo script can also be found in the working directory.

The job's output is saved in the working directory under names such as test.sh.o82 and test.sh.e82 (the first holds standard output, the second holds standard error).
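The submission steps described above can be tried end to end. The following is a minimal sketch, not the cluster's official demo script: the file name hello.sh is hypothetical (chosen so that the existing test.sh is not overwritten), and the qsub call is skipped when the Torque client tools are not installed.

```shell
# Write a small batch script; hello.sh is a hypothetical file name.
cat > hello.sh <<'EOF'
#!/bin/bash
# Print the name of the node on which the job runs.
echo "Sveiciens no nodes $(/bin/hostname)"
EOF

# Submit only where the Torque client tools are available.
if command -v qsub >/dev/null 2>&1; then
    job_id=$(qsub hello.sh)      # qsub prints the job identifier
    echo "Submitted job $job_id"
    # After the job finishes, look for hello.sh.o<number> (standard output)
    # and hello.sh.e<number> (standard error) in the working directory.
else
    echo "qsub not found: run this on the login node"
fi
```

The script body is the same single echo command shown earlier, with `$(...)` used in place of backticks; both forms run the command and insert its output.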

As an alternative to a batch job, an interactive job may be used:

qsub -I

For more information about the qsub command, run: man qsub

- Command for checking the status of job execution:

qstat

Job states in the output: R = running, C = completed, Q = queued
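When many jobs are listed, the state codes can be summarised with a small helper. This is a sketch under one assumption: that qstat prints its default table with two header lines and the state code in the fifth column. The function name count_job_states is hypothetical.

```shell
# Count jobs per state from default-format qstat output read on stdin.
# Assumes two header lines and the state code in the fifth column.
count_job_states() {
    awk 'NR > 2 && NF >= 6 { n[$5]++ } END { for (s in n) print s, n[s] }' | sort
}

# Usage on the login node:
#   qstat | count_job_states
```

Each output line holds a state code followed by the number of jobs in that state.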

- Command for showing the job queue (including all users):

showq

- Command for ascertaining the available computing resources:

showbf

- Command for showing more detailed information about the nodes:

pbsnodes

- Command for parallel job execution:

Run a job on 48 cores (4 servers, 12 cores in each server):

$ qsub -q batch -l nodes=4:ppn=12 mpi.sh

Run a job on 48 cores without specifying the number of nodes:

$ qsub -q batch -l procs=48 mpi.sh
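The relation between the two forms is simple arithmetic: nodes multiplied by ppn gives the total core count. The sketch below only prints the resulting command and does not submit anything; the helper names build_request and total_cores are hypothetical.

```shell
# Build a Torque resource request from a node count and cores per node.
build_request() {
    echo "nodes=$1:ppn=$2"
}

# Total number of cores for a given node count and cores per node.
total_cores() {
    echo $(( $1 * $2 ))
}

# Print the command for 4 nodes with 12 cores each (dry run, nothing is submitted).
echo "qsub -q batch -l $(build_request 4 12) mpi.sh  # $(total_cores 4 12) cores in total"
```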

- Job requirements may be specified at the beginning of the batch script:

#PBS -N gamess_job

#PBS -l walltime=24:00:00

#PBS -l nodes=4:ppn=12

#PBS -q batch

#PBS -j oe
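Directives like these are followed by ordinary shell commands in the same file. The following sketch shows one possible layout, not the cluster's reference script: the job name alloc_report and the function report_allocation are hypothetical, and the script relies on the standard Torque variables PBS_O_WORKDIR (the directory from which qsub was called) and PBS_NODEFILE (a file with one line per allocated core).

```shell
#!/bin/bash
#PBS -N alloc_report
#PBS -l walltime=00:05:00
#PBS -l nodes=4:ppn=12
#PBS -q batch
#PBS -j oe

# Change to the submission directory; fall back to the current directory
# so the script can also be tried outside the batch system.
cd "${PBS_O_WORKDIR:-$PWD}" || exit 1

# Report the number of cores and distinct nodes listed in a node file.
report_allocation() {
    nodefile=$1
    cores=$(( $(wc -l < "$nodefile") ))
    nodes=$(( $(sort -u "$nodefile" | wc -l) ))
    echo "$cores cores on $nodes nodes"
}

if [ -n "${PBS_NODEFILE:-}" ] && [ -r "$PBS_NODEFILE" ]; then
    report_allocation "$PBS_NODEFILE"
else
    echo "PBS_NODEFILE is not set: not running under Torque"
fi

# A real job would start its program here, for example (hypothetical binary):
# mpirun -machinefile "$PBS_NODEFILE" ./my_mpi_program
```

With the directives inside the script, the job can be submitted with a plain qsub call and no extra options.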

- Cancellation of a job:

qdel <job_id>
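qdel takes the job identifier that qsub prints at submission time. A minimal sketch, assuming the identifier has the usual Torque form of a sequence number followed by a server name; the helper job_number and the sample identifier 82.master in the comments are hypothetical.

```shell
# Strip the server suffix from a Torque job identifier such as 82.master.
job_number() {
    echo "${1%%.*}"
}

# Submit and cancel only where the Torque client tools are available.
if command -v qsub >/dev/null 2>&1; then
    job_id=$(qsub test.sh)       # qsub prints the job identifier
    echo "Submitted job number $(job_number "$job_id")"
    qdel "$job_id"               # cancel the job again
else
    echo "qsub not found: run this on the login node"
fi
```

The sequence number is the same number that appears in output file names such as test.sh.o82.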

- Copying of files between personal computer and login node:

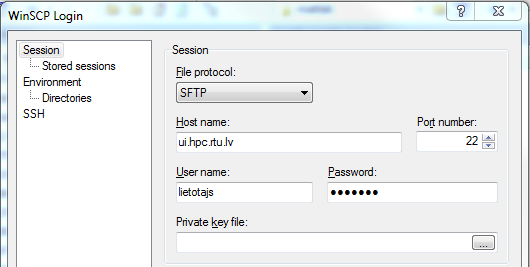

Windows users may use WinSCP or the Far Manager file manager.

Connecting with WinSCP is similar to connecting with PuTTY.

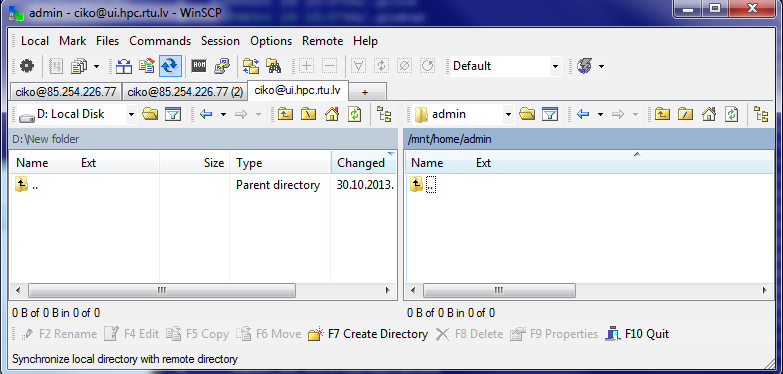

Files stored on the user's personal computer are shown on the left, and the workspace on the cluster login node is shown on the right.

- In a Unix environment, use the command-line scp tool or any graphical tool.
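For the command-line route, two small wrappers around scp may be convenient. This is a sketch with placeholder values: the user name and the host name login-node.example.org are hypothetical and must be replaced with the real account and login node address; the function names upload and download are also illustrative.

```shell
# Hypothetical values: replace with your own user name and login node address.
CLUSTER_USER="username"
CLUSTER_HOST="login-node.example.org"

# Copy a local file or directory to the cluster (home directory by default).
upload() {
    scp -r "$1" "${CLUSTER_USER}@${CLUSTER_HOST}:${2:-}"
}

# Copy a file or directory from the cluster to a local directory (current by default).
download() {
    scp -r "${CLUSTER_USER}@${CLUSTER_HOST}:$1" "${2:-.}"
}

# Usage:
#   upload test.sh            # send the job script to the cluster
#   download test.sh.o82      # fetch the job's standard output
```

An empty path after the colon refers to the user's home directory on the remote side, which matches the workspace described earlier.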

Note: Users should not run computations directly on the access server (login node). The access server should be used only for submitting jobs, compiling programs, and testing short jobs.